It’s an extraordinary time for software development. Advances in large language models (LLMs), AI systems that understand and generate human-like text, are fundamentally reshaping how we build software. As Andrej Karpathy (former Director of AI at Tesla) recently put it, “programming computers in English is now a reality and it’s only the beginning.” In this new paradigm, natural-language prompts can drive code generation, lowering the barrier to programming and accelerating development. Business leaders are taking note: LLM-powered tools are rapidly moving from experimental novelties to indispensable utilities in the software industry. In this article, we explore how LLMs are changing coding workflows and developer tools, examine projections of partial autonomy in engineering (the idea of “autonomy sliders”), and discuss Karpathy’s vision of treating LLMs as utilities, operating systems, and even code factories. The goal is to give a clear, executive-level understanding of why this AI-driven shift, which Karpathy frames as the move from Software 2.0 to Software 3.0, is poised to transform software development and what it means for businesses.

The New Era of Software 3.0: Coding with Natural Language

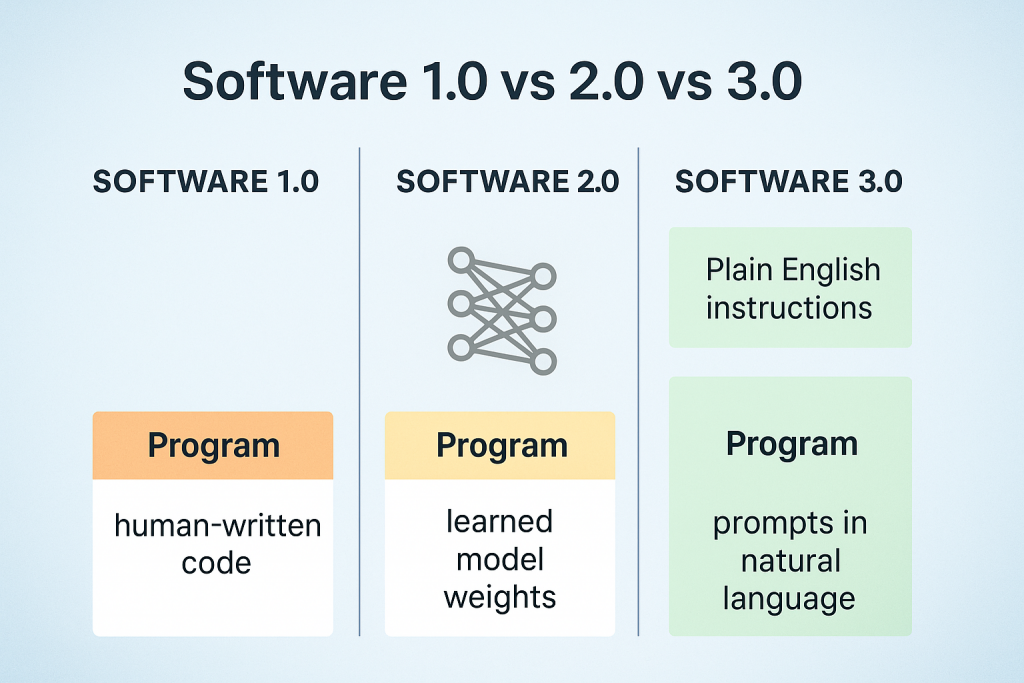

Karpathy describes the evolution of software in three eras. Software 1.0 is hand-written code, e.g. in C++ or Python. Software 2.0 is neural networks: developers curate datasets, and an optimization algorithm tunes the network’s weights. Software 3.0 is characterized by LLMs that let us “code” with natural-language prompts instead of traditional syntax. In Software 3.0, the programming language is effectively English (or any human language), and the model interprets the intent to generate executable code or answers. This shift is democratizing software creation. It is no longer confined to professional programmers, and far more people can instruct a computer to build software using everyday language.
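To make the three eras concrete, here is a toy sentiment classifier written each way, as a minimal Python sketch.

- The 1.0 version uses hand-written keyword rules.
- The 2.0 version learns word weights from labeled examples. A tiny perceptron stands in for a neural network.
- The 3.0 version is just an English prompt handed to a model.

The `llm` argument is a hypothetical stand-in for any text-in, text-out model client; no real API is assumed.

```python
from typing import Callable

# Software 1.0: a human writes the decision rules by hand.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "awful", "hate", "terrible"}


def sentiment_v1(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score >= 0 else "negative"  # ties default to positive


# Software 2.0: the "program" is a table of weights learned from labeled examples
# (a simple perceptron here, standing in for a neural network).
def train_v2(examples: list[tuple[str, str]], epochs: int = 10) -> dict[str, float]:
    weights: dict[str, float] = {}
    for _ in range(epochs):
        for text, label in examples:
            target = 1 if label == "positive" else -1
            words = text.lower().split()
            score = sum(weights.get(w, 0.0) for w in words)
            predicted = 1 if score >= 0 else -1
            if predicted != target:  # adjust weights only when the model is wrong
                for w in words:
                    weights[w] = weights.get(w, 0.0) + target
    return weights


def sentiment_v2(weights: dict[str, float], text: str) -> str:
    score = sum(weights.get(w, 0.0) for w in text.lower().split())
    return "positive" if score >= 0 else "negative"


# Software 3.0: the "program" is an English prompt; `llm` is any text-in,
# text-out model client (hypothetical here, so any callable works).
PROMPT = (
    "Classify the sentiment of the review below as 'positive' or 'negative'. "
    "Answer with one word.\n\nReview: {review}"
)


def sentiment_v3(llm: Callable[[str], str], text: str) -> str:
    return llm(PROMPT.format(review=text)).strip().lower()


weights = train_v2([
    ("I love it", "positive"),
    ("I hate it", "negative"),
    ("great product", "positive"),
    ("awful product", "negative"),
])
print(sentiment_v1("I love this"), sentiment_v2(weights, "I hate this product"))
```

The "source code" changes character across the three versions:

- In 1.0 it is explicit rules.
- In 2.0 it is learned numbers.
- In 3.0 it is plain English, so the program's behavior depends entirely on the model behind `llm`.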

In practice, a non-developer could describe an idea for an app or a feature in plain English, and an AI system can produce workable code. We’ve seen early examples of “vibe coding,” a term Karpathy coined for building software by iteratively prompting an AI until the program “feels” right. This approach lowers barriers and makes prototyping much faster. Taking a prototype to production, however, still requires traditional engineering work such as setting up authentication, integrations, and infrastructure. For business leaders, the implication is significant: the pool of people who can create or prototype software is expanding, potentially enabling more innovation from diverse teams. Coding is turning into a higher-level creative activity, more about what you want to accomplish than about the exact syntax to make it happen.

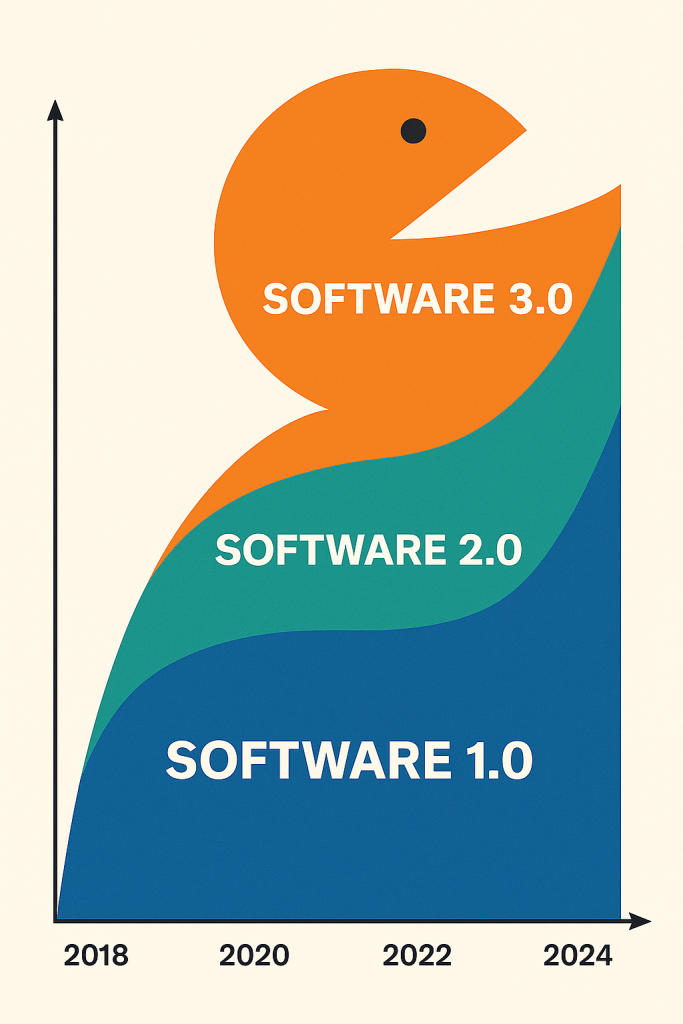

Karpathy likens this moment to the dawn of personal computing. Today’s LLMs are “a new kind of computer” in their own right. They represent a major software paradigm upgrade, not simply an incremental improvement. In his view, we’re on the cusp of rewriting “a huge amount” of existing software to adapt to this paradigm. Old code was refactored or replaced during past transitions, such as mainframes to PCs or on-premise to cloud. In the same way, LLMs may drive a massive wave of software redevelopment, with legacy Software 1.0 and 2.0 systems supplemented or replaced by more dynamic, AI-driven Software 3.0 solutions. The core message is that software is changing again at a fundamental level, and businesses that recognize and embrace this shift stand to gain a competitive edge.

LLMs Transforming Coding Workflows and Developer Tools

The impact of LLMs is already being felt on the front lines of software development, where AI assistance is supercharging coding workflows. A growing array of developer tools leverage LLMs to make programming faster and easier.

For instance, GitHub Copilot, an AI pair-programmer trained on vast amounts of public code, can autocomplete code snippets or suggest entire functions as a developer types. This speeds up routine coding considerably and can head off common mistakes by suggesting conventional patterns.

Tools like Cursor (an AI-integrated code editor forked from VS Code) go further, allowing developers to write code using high-level instructions or questions. In Cursor’s editor, you can ask the AI to implement a feature or explain a section of code, and it will propose code changes or explanations on the fly. Early adopters report producing prototypes in a fraction of the usual time. In one case, a developer used Cursor to generate a to-do app’s UI and functionality in under 5 minutes, a task that might otherwise have taken hours.

Beyond coding itself, LLM-driven tools are streamlining developer workflows. AI-powered answer engines like Perplexity provide quick answers to technical questions by searching documentation and knowledge bases, saving developers manual research. Instead of sifting through pages of API docs or Stack Overflow posts, a developer can query an AI assistant that cites the relevant docs and even gives examples. This kind of tool turns natural-language questions into actionable information, accelerating problem-solving and learning. Meanwhile, other platforms are integrating LLM capabilities to handle tasks like code review, test generation, and debugging suggestions. Examples include Replit’s Ghostwriter and Amazon’s CodeWhisperer, since folded into Amazon Q Developer. They act as tireless junior developers or intelligent search agents embedded in the development environment.

Crucially, these AI enhancements are translating into measurable productivity gains. Recent research on global coding behavior found that AI-generated code is becoming quite prevalent: in the U.S., an estimated 30% of new Python code on GitHub is now written with the help of generative AI tools. Developers who use these tools get more done. In that research, a 30% adoption rate of AI coding assistants was associated with a 2.4% increase in quarterly code commits, which the study estimates could equate to $10+ billion in annual value in the U.S. software industry alone. These numbers hint at significant efficiency improvements, though commit counts measure output volume rather than quality. In practical terms, teams can ship features faster and potentially with fewer bugs, as AI assistants flag mistakes or suggest improvements in real time. For a business executive, such productivity boosts can mean faster time-to-market and reduced development costs, a key competitive advantage.

Moreover, LLM-powered tools are not just for seasoned developers. By lowering the expertise required to write code, they empower domain experts and other employees to contribute to software solutions. We’re seeing the rise of “citizen developers” who, with AI help, can automate processes or create simple applications without formal coding training. This can alleviate IT backlogs and spur innovation within organizations, as more ideas can be tried and tested. While LLMs make coding more accessible, however, professional developers aren’t going away; instead, their focus shifts. They spend more time on higher-level design, architecture, and refining AI-generated output, and less time on boilerplate code. In effect, human developers are becoming supervisors and collaborators of AI, guiding it with project-specific knowledge and ensuring quality control.

To support this new collaborative workflow, new tools and best practices are emerging, and an ecosystem of AI coding assistants now extends well beyond Copilot. Editors and IDEs are integrating AI through plugins and built-in features. For example, Cursor, Cline, Roo Code, Windsurf, and others have appeared as AI copilots for code, each with unique features. We even see meta-tools like “Rulebook AI” that aim to unify how these assistants operate, ensuring they follow software engineering best practices consistently. All these developments underscore that AI is becoming a ubiquitous part of the developer’s toolkit. Writing code is becoming less about typing every character and more about orchestrating AI suggestions and checking the results. Companies like Microsoft (through GitHub Copilot) and OpenAI are positioning LLM services as the next essential developer platform, akin to what cloud services were in the last decade.

LLMs as Utilities, Operating Systems, and Code Factories

Karpathy suggests that to truly grasp the impact of LLMs, we should consider them through multiple lenses. He argues that LLMs have attributes of utilities, operating systems, and even industrial-grade fabrication labs. This multi-faceted analogy is useful for a business audience to understand both the opportunity and the economics of this AI shift.

LLMs as Utilities: In many ways, AI models are becoming a new kind of utility, like electricity or water, that companies tap into on demand. Running a top-tier LLM requires massive computing infrastructure (data centers with specialized AI chips), similar to how electricity requires power plants and a grid. The upfront capital expenditure to train a leading model is enormous (tens to hundreds of millions of dollars, akin to building a power plant). Once trained, the model’s intelligence can be delivered over the network via an API, and usage is metered. Providers charge by the token, typically quoting prices per million tokens, much as a utility bills per kWh.
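Metered billing makes AI spend forecastable in the same way as an electricity bill. The sketch below estimates a monthly bill from request volume and token counts. The per-million-token prices are hypothetical placeholders, not any provider's actual rates.

```python
# Hypothetical list prices in USD per million tokens; real rates vary by
# provider and model, and output tokens usually cost more than input tokens.
PRICE_PER_M_INPUT = 3.00
PRICE_PER_M_OUTPUT = 15.00


def monthly_cost(requests_per_day: int, input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate a month's bill, much as one would estimate kWh usage."""
    total_input = requests_per_day * input_tokens * days
    total_output = requests_per_day * output_tokens * days
    return (total_input * PRICE_PER_M_INPUT + total_output * PRICE_PER_M_OUTPUT) / 1_000_000


# 1,000 requests a day, each with a 2,000-token prompt and a 500-token answer.
print(f"${monthly_cost(1_000, 2_000, 500):,.2f}")
```

With these assumed prices, the example works out as follows:

- 60 million input tokens cost $180.
- 15 million output tokens cost $225.
- The total is $405 a month.

Real bills also depend on model choice and retries.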

Just as businesses came to rely on electricity for every process, we are increasingly relying on LLM services for coding, customer support, analytics, and more. For now, though, LLMs might be considered a “non-essential utility.” If the power grid goes down, a factory grinds to a halt, but if an AI service goes down, a company can still operate, albeit less efficiently. That said, this is changing fast; some teams report that “if ChatGPT is down, work halts” for them. The demand for AI uptime, low-latency responses, and consistent performance is growing, much like expectations for electricity reliability.

We’re even witnessing “intelligence brownouts” – for example, when a major LLM API has an outage, it’s akin to a temporary loss of power for AI-driven operations.

This utility-like aspect underscores the need for robust AI infrastructure and possibly redundancy (companies might subscribe to multiple AI providers, similar to having backup generators). For executives, treating LLM access as a utility means planning for its cost (AI usage bills) and ensuring reliability – AI becomes part of the critical infrastructure of the business.
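The “backup generator” idea can be sketched as a simple failover: try providers in priority order and fall back when one errors out. In this minimal Python sketch, the provider functions are hypothetical stand-ins for real SDK calls. Treating any exception as a reason to fail over is a simplifying assumption.

```python
from typing import Callable, Sequence

# (name, client function); the clients below are hypothetical stand-ins.
Provider = tuple[str, Callable[[str], str]]


class AllProvidersFailed(RuntimeError):
    """Raised when every configured provider errors out."""


def complete_with_fallback(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, completion)."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # outage, timeout, rate limit, etc.
            errors.append(f"{name}: {exc!r}")
    raise AllProvidersFailed("; ".join(errors))


def primary_down(prompt: str) -> str:
    raise ConnectionError("simulated outage")  # an "intelligence brownout"


def backup(prompt: str) -> str:
    return "stub completion"


used, text = complete_with_fallback("Summarize this diff", [("primary", primary_down), ("backup", backup)])
print(used, text)
```

In practice, teams often add timeouts and retries before failing over. They also keep prompts portable, since different models can respond differently to the same prompt.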

LLMs as Operating Systems: On another level, LLMs can be seen as a new AI operating system that applications run on. Traditional operating systems (Windows, Linux, etc.) abstract hardware and provide services for software; analogously, LLMs abstract vast knowledge and provide general-purpose “intelligence services” for applications. Developers are starting to build apps that call an LLM for reasoning, decision-making, or code generation, essentially using the model as the runtime brain of the app. Karpathy notes parallels to the 1960s era of computing here: today’s large models are mostly centralized in the cloud (like the mainframe era) and users interface with them via relatively thin clients (like time-sharing terminals). In this view, an LLM is the central “CPU” executing high-level instructions (in English), and the apps we build around it are like GUI wrappers and I/O handlers. Treating an LLM as an OS also highlights the emerging ecosystem and platform dynamics: for instance, different LLM providers (OpenAI, Google, Anthropic, etc.) offer models with varying “features” and performance, much like competing OS platforms.

There is some friction in switching between them due to differences in APIs and capabilities, but efforts are underway to standardize interfaces. Another OS-like attribute is memory management. The model’s context window acts as working memory that holds the conversation so far; in most APIs the application resends that history with each request. We budget prompt tokens much as programs budget RAM. Karpathy even compares the prompt (user input) versus the model’s internal processing to user space versus kernel space in an operating system. The key takeaway is that LLMs are becoming a foundational layer of software abstraction. Forward-looking companies might view an LLM (or ensemble of models) as part of their core tech stack. It becomes a strategic “AI platform” upon which many future products and features will be built, much like an operating system enables a universe of software applications.
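The memory analogy has a practical side. The context window is finite, so applications must decide what to keep in it, much as an OS decides what stays in RAM. Below is a minimal sketch of one common strategy: keep the system prompt and the most recent messages that fit a token budget. Counting whitespace-separated words is an assumption for illustration. Real models use subword tokenizers, so actual counts differ.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in: real models use subword tokenizers, so actual counts differ.
    return len(text.split())


def fit_to_context(system: str, history: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus the most recent messages that fit in `budget` tokens."""
    used = count_tokens(system)
    if used > budget:
        raise ValueError("system prompt alone exceeds the context budget")
    kept: list[str] = []
    for message in reversed(history):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > budget:
            break  # older messages are "paged out"
        kept.append(message)
        used += cost
    return [system] + kept[::-1]  # restore chronological order


system = "You are a careful coding assistant."
history = ["How do I read a file?", "Use open() inside a with block.", "And write one?"]
print(fit_to_context(system, history, budget=12))
```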

LLMs as Factories (Code and Content Factories): Another striking comparison is to fabrication labs or factories. High-end LLMs require huge R&D investment and sophisticated “manufacturing” (model training is akin to an industrial process), which is why Karpathy draws an analogy to semiconductor fabs. Just as only a few companies can afford to build cutting-edge chip fabs, only a handful of players can currently “fab” the largest and most advanced AI models. This has implications for competitive moats and collaboration: AI expertise and computing infrastructure are strategic assets, similar to factory capacity.

On the output side, once you have a trained model, it functions like a factory for knowledge and code. You feed in ideas (prompts), and out come the “manufactured” products, be it a piece of code, a design, or a report. In the software realm, an LLM can churn out code at a scale and speed that would require an army of human developers. This is why some refer to AI code generation as a “software factory.” An AI can, for example, generate boilerplate code across an entire codebase in seconds, something that would take humans days.

We should note, however, that AI factories, much like real ones, need quality control. The outputs aren’t guaranteed to be perfect, so you need processes (tests, human reviews) to catch flaws, akin to QA in manufacturing. Karpathy’s vision suggests a future where companies maintain an “AI factory” that continuously produces and refines software, guided by human engineers who specify requirements and verify outputs. In essence, LLMs can dramatically scale up production in knowledge work. For business leaders, this could mean executing on more initiatives with the same human workforce. The AI handles the heavy lifting of drafting and producing content or code, while employees focus on oversight and guidance. It’s an amplification of human productivity analogous to the Industrial Revolution’s impact on manual labor.

All these analogies converge on the idea that LLMs are becoming a general-purpose resource in the economy of software and information. They have high upfront costs and complexity (like utilities and fabs), they provide a foundational platform for others to build on (like operating systems), and they massively accelerate production (like automated factories). Karpathy emphasizes that unlike some past technologies which were initially siloed or exclusive, LLMs have been “rapidly democratized” – thanks to cloud APIs, any individual developer or small startup today can access world-class AI on a pay-as-you-go basis, rather than only large corporations having this power. This democratization is why we see such an explosion of tools and startups leveraging LLMs; the “intelligence utility” is broadly available.

Partial Autonomy and the “Autonomy Slider” in Software Engineering

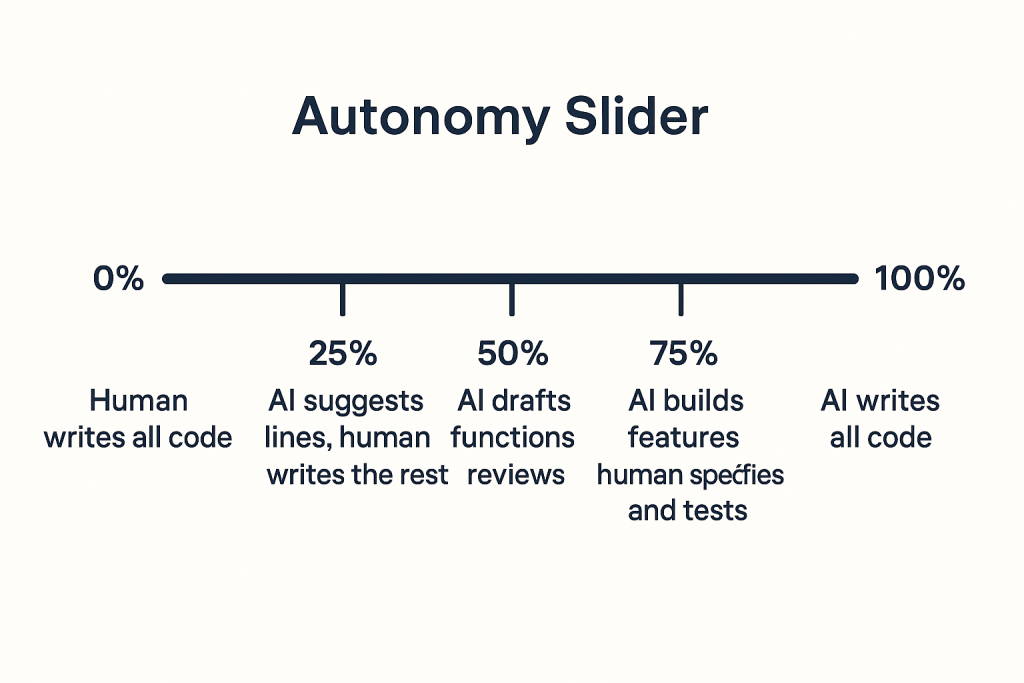

A critical question often arises: will AI fully automate programming, or will it augment human developers? The emerging consensus, and one Karpathy strongly advocates, is that the future is about partial autonomy, with humans and AI working together. Instead of aiming for a mythical “Coder AI” that replaces engineers entirely, the focus is on building AI copilots that can take on more of the grunt work while humans maintain steering control. Karpathy uses the concept of an “autonomy slider” to illustrate this continuum. At one end of the slider is manual coding, 100% human with no AI assistance. At the other end is fully autonomous generation, with AI doing everything end-to-end. In between lie various blends of human-machine collaboration, and we can dial in the level of autonomy appropriate for the task and the comfort level of the user.

Today’s AI coding tools mostly sit somewhere in the middle of that slider. Take GitHub Copilot’s default behavior: it offers suggestions as you type, but the human accepts or rejects each suggestion, staying in the loop. This is low to moderate autonomy.

More advanced systems go further. For example, the Cursor code editor has modes that effectively let you adjust the autonomy slider:

- In “Manual” mode, the AI acts only on the specific instructions and locations you provide. You explicitly say what file to edit and perhaps even outline the change, and the AI generates code for you to review. This mode is very much human-driven.
- In the default “Agent” mode, Cursor’s AI can take a higher-level goal (e.g., “implement a user login feature”) and independently figure out which files to create or modify. It can even execute test runs or terminal commands, all with minimal guidance, essentially behaving like an autonomous junior developer.

These modes map to points on the autonomy spectrum. A developer might start in manual mode to keep tight control. As confidence in the AI grows, they can gradually let it handle more by switching to agent mode for broader tasks.

Karpathy’s vision is that software products themselves will expose such autonomy controls to users. In designing AI-powered applications, “apps should allow users to control how much autonomy they delegate”. For example, an email management AI might have a slider setting from “assist” (where it just suggests replies for the user to edit) up to “auto-pilot” (where it can send routine responses on its own). In coding, we already see this with tools that can either just hint at the next line of code or, if allowed, generate an entire module. The point is not to force a jump to full automation, but to build trust and gradually increase AI’s role as needed. Different projects and teams will choose different points on the slider – a safety-critical software project might always keep AI on a tight leash (lots of human verification), whereas a prototype project might crank autonomy higher for speed.
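An autonomy slider can be as simple as a setting that decides which AI actions run automatically and which wait for human approval. The minimal Python sketch below assumes three hypothetical levels and two action types. It is loosely modeled on the suggest, scoped-edit, and agent behaviors described above, not on any specific product's settings.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST = 0  # AI only proposes; the human applies every change
    SCOPED = 1   # AI may edit files the human explicitly pointed it at
    AGENT = 2    # AI may choose files itself and run commands


@dataclass
class Action:
    kind: str                  # "edit" or "run_command" (hypothetical action types)
    target: str                # file path or shell command
    user_scoped: bool = False  # True if the human named this target


def may_auto_apply(action: Action, level: Autonomy) -> bool:
    """Return True if the action can run without asking the human first."""
    if action.kind == "edit":
        if level >= Autonomy.AGENT:
            return True
        return level == Autonomy.SCOPED and action.user_scoped
    if action.kind == "run_command":
        return level >= Autonomy.AGENT
    return False  # unknown action types always go to the human


edit = Action("edit", "login.py", user_scoped=True)
print([may_auto_apply(edit, level) for level in Autonomy])
```

Moving the slider to the right widens the set of actions that skip the approval step. Everything else still goes through the human.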

Karpathy uses a compelling metaphor from popular culture: he prefers Iron Man’s suit over an Iron Man robot. In the Marvel movies, the Iron Man suit augments Tony Stark (the human), giving him superhuman capabilities, but it’s ultimately Tony in control: it’s an extension of him. In some scenes the suit has autonomous features (like an auto-targeting system), but it works in concert with the human pilot. An Iron Man robot, by contrast, would be a fully independent AI warrior, with no human in the loop. Karpathy’s advice: build “Iron Man suits,” not “Iron Man robots.” In other words, design AI systems that augment human developers with partial autonomy, rather than trying to replace the human entirely. This approach yields practical and reliable improvements without waiting for sci-fi-level general AI. It acknowledges that current AI, while powerful, still makes mistakes or weird decisions (“hallucinations” in AI parlance), so having a human partner to guide and check it is invaluable.

Concretely, this philosophy shows up as fast iteration loops between human and AI. Karpathy notes the importance of a “fast generation–verification loop”: the AI generates a candidate solution quickly, and the human immediately verifies or corrects it. This tight loop can dramatically speed up development while maintaining quality. Picture an AI writing 10 different versions of a function in seconds and the human picking the best one. The productivity gain comes not just from AI writing code, but from the human-AI team evaluating multiple options quickly. Many modern AI coding tools are embracing this. They generate multiple suggestions or even open a pull request with several proposed changes, which the developer can then review and merge if acceptable. The human is still steering the ship, but the AI engine is providing a turbo boost.
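Here is a minimal Python sketch of that loop. Several candidate implementations are filtered by automated tests, and only the survivors reach a human reviewer. The candidates are hard-coded as stand-ins for model samples, and the function names and test cases are illustrative assumptions.

```python
from typing import Any

# Stand-ins for several samples from a model asked to "write add(a, b)".
CANDIDATES = [
    "def add(a, b):\n    return a - b\n",
    "def add(a, b):\n    return a + b\n",
    "def add(a, b) return a + b\n",  # syntax error
    "def add(a, b):\n    return a * b\n",
]

TESTS: list[tuple[tuple[Any, ...], Any]] = [((2, 3), 5), ((0, 0), 0), ((-1, 1), 0)]


def passes(source: str, fn_name: str, tests: list[tuple[tuple[Any, ...], Any]]) -> bool:
    namespace: dict[str, Any] = {}
    try:
        exec(source, namespace)  # acceptable only for trusted demos; sandbox real output
        fn = namespace[fn_name]
        return all(fn(*args) == expected for args, expected in tests)
    except Exception:
        return False  # syntax errors, missing function, runtime errors all count as failures


def shortlist(candidates: list[str], fn_name: str, tests: list[tuple[tuple[Any, ...], Any]]) -> list[str]:
    """Automated first pass: keep only candidates that pass every test."""
    return [c for c in candidates if passes(c, fn_name, tests)]


survivors = shortlist(CANDIDATES, "add", TESTS)
print(f"{len(survivors)} of {len(CANDIDATES)} candidates go to human review")
```

Running generated code with `exec` is only acceptable in a trusted demo like this one. Real pipelines run candidates in an isolated sandbox.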

From a business standpoint, the “autonomy slider” concept is reassuring because it means we’re not leaping into all-or-nothing automation; organizations can incrementally adopt AI assistance in development. Initially, a company might use AI for small code suggestions and automated documentation. As confidence grows and the tools improve, they might automate larger chunks of development (like an AI agent that can scaffold an entire new microservice based on specifications, with humans supervising). This gradual ramp-up is akin to how autonomous driving is being rolled out from driver assistance to partial self-driving, rather than straight to full self-driving in all conditions. Companies can therefore manage risk: keep the human in control where needed, but leverage automation aggressively where it’s safe and efficient to do so.

It’s also worth mentioning the UI/UX implications. Karpathy highlights the need for custom graphical interfaces to support this human-AI partnership. For example, in coding, having a visual diff of what the AI changed makes it easier for the human to verify the AI’s work. Or in an AI-driven data analysis app, providing a dashboard that shows which steps the AI has taken and confidence levels would help the user trust and fine-tune the process. Designing good “centaur” systems (where human + AI together outperform either alone) is an emerging art. Businesses might need to invest in new tools or training to fully realize the productivity gains – it’s not just the AI model, but how it’s integrated into workflows that determines success.
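As a small example of the verification aid described above, Python's standard `difflib` module can render an AI's proposed edit as a unified diff. This is the before/after format used by common code-review tools. The file name and code snippets are made up for illustration.

```python
import difflib


def review_diff(before: str, after: str, path: str) -> str:
    """Render an AI edit as a unified diff for human review."""
    return "".join(
        difflib.unified_diff(
            before.splitlines(keepends=True),
            after.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )


original = "def total(items):\n    return sum(items)\n"
ai_edit = (
    "def total(items):\n"
    "    # Skip missing values\n"
    "    return sum(i for i in items if i is not None)\n"
)
diff_text = review_diff(original, ai_edit, "billing.py")
print(diff_text)
```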

Karpathy cautions against overhyping fully autonomous “AGI agents” in the near term. While demos of AI agents that supposedly code entire apps or act like a developer persona are flashy, they often fall short on reliability. The likely path is more prosaic: steadily improving partial autonomy. Human oversight remains crucial for the foreseeable future, especially in complex projects. In effect, we’ll keep moving that autonomy slider to the right as AI gets better, but always with a hand on the dial. This balanced approach means companies can start reaping AI benefits now (with humans firmly in control) and increase autonomy gradually rather than waiting years for perfect automation.

Broader Implications and the Road Ahead

Stepping back, treating LLMs as utilities, OSes, and collaborative partners hints at broader business implications. Software development may never be the same. We are likely to see an era where much of the routine programming work is automated, and human creativity is applied more to defining problems, goals, and verifying solutions. Karpathy suggests we are in the “1960s of LLMs”: an early phase comparable to the dawn of computing, brimming with potential but still primitive in some ways. If that analogy holds, the coming decade could bring an explosion of applications and refinements:

Rewriting Legacy Software: Many existing software systems (the ones built in the Software 1.0 and 2.0 paradigms) will be upgraded or entirely rewritten with AI assistance. This doesn’t mean throwing away all old code, but we can expect new versions that have AI at their core: for example, user interfaces that accept natural language commands, or business logic that is continually optimized by AI analyzing usage patterns. Enterprises might undertake AI-driven refactoring of their codebases, where an AI helps modernize code (convert old languages to new, improve performance, fix bugs) at a scale previously impossible.

New Software Architecture Patterns: With LLMs as an OS or platform, developers will create architectures that leverage AI components for particular tasks. For instance, a SaaS application might use an LLM to interpret user requests (“write me a report on X”) and then call traditional code to execute it. We might see “AI services” become standard in software stacks: analogous to how a web app today includes services for database, caching, etc., tomorrow it might also include an AI reasoning service or an AI-generated documentation module. Companies should watch how software engineering best practices evolve in this hybrid human-AI era (e.g., how do you do version control for AI-generated code? How do you test AI suggestions? New methodologies will emerge).
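A minimal Python sketch of that pattern follows. The model only translates a request into a structured plan; ordinary code then validates the plan and runs an allow-listed handler. The handler names, the JSON shape, and `fake_llm` are all hypothetical; a real system would call an actual model.

```python
import json
from typing import Callable


# Deterministic "traditional code" the model is allowed to trigger (hypothetical handlers).
def make_report(topic: str) -> str:
    return f"Report on {topic} (draft)"


def export_csv(table: str) -> str:
    return f"Exported {table} to {table}.csv"


HANDLERS: dict[str, Callable[..., str]] = {"make_report": make_report, "export_csv": export_csv}

INSTRUCTIONS = (
    'Convert the user request to JSON like {"action": "make_report", "args": {"topic": "<topic>"}}. '
    "Allowed actions: make_report(topic), export_csv(table). Reply with JSON only."
)


def handle_request(user_text: str, llm: Callable[[str], str]) -> str:
    """The LLM interprets intent; ordinary code validates and executes it."""
    try:
        plan = json.loads(llm(f"{INSTRUCTIONS}\n\nRequest: {user_text}"))
    except json.JSONDecodeError:
        return "Sorry, I could not parse that request."
    action = plan.get("action") if isinstance(plan, dict) else None
    args = plan.get("args", {}) if isinstance(plan, dict) else None
    if not isinstance(action, str) or action not in HANDLERS or not isinstance(args, dict):
        return "Sorry, that action is not supported."
    try:
        return HANDLERS[action](**args)
    except TypeError:  # wrong or missing arguments from the model
        return "Sorry, that request was missing details."


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; always proposes a report.
    return '{"action": "make_report", "args": {"topic": "Q3 churn"}}'


print(handle_request("write me a report on Q3 churn", fake_llm))
```

Keeping execution in deterministic code limits what a confused or manipulated model can do. At worst, it triggers one of the allowed actions rather than running arbitrary logic.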

Agent-Oriented Infrastructure: An intriguing idea Karpathy raises is preparing for “agent-first” infrastructure. If AI agents (software processes powered by LLMs) interact with our systems, we may need to make those systems more legible and navigable to AI. For example, he floated the notion of websites including an llms.txt file, analogous to robots.txt, that provides instructions or documentation specifically for AI agents crawling or using the site. Likewise, APIs and documentation might be reformatted for easy consumption by AI, through machine-readable docs and standardized schemas, so that an AI agent can, say, learn how to use a new API on its own. Businesses that foresee offering services to AI agents might adopt these practices early. This would make their platforms “AI-friendly” and thus more likely to be integrated by autonomous agent software. It’s a bit futuristic, but we’re already seeing hints: some companies publish OpenAPI specifications that AI tools can read directly, as ChatGPT’s plugin system did. The web may gradually adapt to have a parallel layer catering to AI consumers.
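Karpathy's suggestion resembles the llms.txt proposal published at llmstxt.org. Under that proposal, a Markdown file at a site's root contains an H1 title, a blockquote summary, and H2 sections listing links with short notes. The sketch below assembles such a file in Python; the service name and example.com URLs are placeholders.

```python
def build_llms_txt(name: str, summary: str, sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Assemble a Markdown llms.txt: title, one-line summary, then sections of annotated links."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        lines += [f"- [{title}]({url}): {note}" for title, url, note in links]
        lines.append("")
    return "\n".join(lines)


llms_txt = build_llms_txt(
    "Acme Payments API",  # hypothetical service
    "REST API for creating invoices and collecting card payments.",
    {
        "Docs": [
            ("Quickstart", "https://example.com/docs/quickstart.md", "Authenticate and create a first invoice"),
            ("API reference", "https://example.com/docs/api.md", "Every endpoint with request and response schemas"),
        ],
    },
)
print(llms_txt)
```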

Workforce and Upskilling: As AI takes over certain coding tasks, the role of developers will shift. There will be growing demand for engineers who are adept at AI orchestration. That means understanding how to get the best output from AI (prompt engineering, tool integration) and how to validate and refine that output. We might also see new hybrid roles, e.g., an “AI software strategist” who decides which parts of a project to automate and how to evaluate AI contributions. Training programs will need to include working with AI tools, not just coding from scratch. For business executives, it will be important to encourage upskilling of technical teams in this direction, so they leverage AI instead of being displaced by those who do. On the flip side, non-engineering roles (designers, product managers, analysts) will benefit from learning some “AI development” skills too, since they can directly build simple apps or prototypes via natural language. This cross-pollination could break down some silos, as more people in an organization can directly contribute to software solutions.

Productivity and Innovation Boom: If used effectively, LLMs can considerably raise a team’s productivity ceiling. Small teams can accomplish what previously took far larger ones. This can level the playing field in competitive markets – a startup with a handful of AI-enhanced developers might challenge an incumbent with dozens of traditional developers. For incumbents, adopting these tools internally becomes not just a matter of efficiency but of strategic necessity to stay competitive. We may also see a boom in software innovation because the cost and time to validate an idea will drop. When prototyping is as simple as “chatting” with an AI to build your MVP (minimum viable product), more ideas can be tried, and niche solutions can be developed profitably. In economic terms, LLMs could significantly lower the marginal cost of software production, which might lead to a proliferation of customized software (each team can have tools tailored to their exact needs, created with help from AI).

Quality and Maintenance Considerations: An area to watch is software quality and maintenance in this new regime. On one hand, AI can improve quality by catching errors and suggesting best practices learned from a huge corpus of code. On the other hand, if not managed well, it could introduce subtle bugs or security issues. Imagine an AI writing code that works but has a hidden vulnerability because it picked up insecure patterns from its training data. There will be a premium on good oversight:

- automated testing;
- code review, possibly AI-assisted, creating a double layer of AI checking AI;
- monitoring in production.

Maintenance might also change. Instead of manually fixing bugs, developers might prompt the AI to generate a fix, or even run continuous systems where the AI monitors logs and proposes patches. Karpathy’s note about “fast human verification loops” highlights that we must design processes where human judgment and automated generation intersect in a controlled way. That way, as we speed up development, we don’t sacrifice reliability.
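To illustrate the kind of hidden flaw mentioned above, here is a minimal Python sketch using the standard `sqlite3` module. Both lookups return the right row for normal input, but only the parameterized version is safe against SQL injection. The table and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])


def find_user_unsafe(name: str) -> list[tuple]:
    # Plausible-looking generated code: passes a happy-path test, but splices
    # untrusted input into the SQL text, which allows SQL injection.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str) -> list[tuple]:
    # Parameterized query: sqlite3 binds `name` as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


attack = "nobody' OR '1'='1"
print(find_user_unsafe("bob"), find_user_unsafe(attack), find_user_safe(attack))
```

Automated security scanning and human review should catch issues like this before AI-generated code ships.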

In summary, LLMs are set to become a ubiquitous part of how we develop and operate software. They are forcing a re-imagination of roles (human vs. machine in coding), tools (IDE features, new platforms), and processes (release cycles, maintenance, collaboration). Karpathy’s enthusiasm is tempered with practical advice: we should embrace the change (it’s a great time to be in tech, as he says), but do so thoughtfully – keep the human in the loop, design for gradual autonomy increase, and build supportive tooling around AI. Businesses that internalize these lessons can ride the wave of AI-fueled software innovation rather than be swamped by it.

Conclusion

Software development is entering a new era: one where AI is a co-creator alongside humans. Large language models have moved beyond research labs into the daily workflows of developers, transforming how code is written, reviewed, and even conceived. Andrej Karpathy’s “Software in the Era of AI” framework provides a powerful lens for understanding this shift. LLMs (Software 3.0) enable us to “program in English,” vastly expanding who can develop software and how fast it can be done. These models function like on-demand utilities and platforms, offering intelligence as a service that any app can plug into. They can act as an ever-ready junior developer, a knowledgeable architect, and a tireless QA tester – all in one – albeit one that needs guidance and guardrails from skilled people.

For the business executive, the message is clear: the rules of the software game are changing, and the winners will be those who adapt. Teams leveraging LLMs can accomplish more with less, iterate quickly, and tackle problems previously out of reach due to resource constraints. But reaping these rewards requires embracing the mindset of human-AI collaboration. That means investing in training your workforce to use AI tools effectively, updating processes to include AI (for instance, code review pipelines that incorporate AI analysis), and perhaps most importantly, fostering a culture that values experimentation with these new tools. Just as businesses had to transform with the rise of the internet or mobile computing, the rise of generative AI in software development calls for strategic adaptation.

Karpathy’s analogy of the Iron Man suit is apt to close on: think of AI as a powerful suit that can make your developers “superhuman,” not a robot to replace them. By putting the right tools in the hands of your talent, and calibrating the autonomy level, you can achieve feats of productivity and innovation that will define the next decade in tech. We are just at the beginning of this journey – akin to the early days of computers – and those who start learning and building now will shape the future. In Karpathy’s words, this is an unprecedented time to enter tech – so let’s build the future together.

References

- Karpathy, Andrej – “Software in the Era of AI” (YC AI Startup School talk, June 2025). Summary by noailabs on Medium (noailabs.medium.com).

- Differentiated.io Daily AI News – “Andrej Karpathy highlights a new software era powered by LLMs…” (June 19, 2025). Covers Karpathy’s analogies of LLMs as utilities, fabs, and operating systems, and his case for partially autonomous products (differentiated.io).

- Emadamerho-Atori, Nefe – “The Good and Bad of Cursor AI Code Editor” (AltexSoft blog, June 25, 2025). In-depth review of Cursor and its AI coding features, including autonomy modes (altexsoft.com).

- GitHub Copilot Adoption Study – Snippet from arXiv research via Differentiated.io on AI coding tools’ impact. Reports that ~30% of Python code on GitHub may be AI-generated, with productivity gains and billions in value from these tools (differentiated.io).

- Karpathy, Andrej – AI Startup School full transcript (transcribed by donnamagi.com, 2025). Notable excerpts on the “Iron Man suit” analogy and the autonomy slider for AI in software (docs.google.com).

- Friedman, Jared (@snowmaker) – Tweet highlights from Karpathy’s talk (2025), e.g. the slide “LLMs have properties of utilities…”.

- Karpathy, Andrej – Discussion on Hacker News (June 2025) around the Software 3.0 talk. Reinforces the coexistence of Software 1.0/2.0/3.0 and the gradual adoption of AI in coding (news.ycombinator.com).